This article explores Netcraft’s research into the use of generative artificial intelligence (GenAI) to create text for fraudulent websites in 2024. Insights include:

- A 3.95x increase in websites with AI-generated text observed between March and August 2024, with a 5.2x increase¹ over a 30-day period starting July 6, and a 2.75x increase in July alone, a trend which we expect to continue over the coming months

- A correlation between the July spike in activity and one specific threat actor

- Thousands of malicious websites across the 100+ attack types we support

- AI is being used to generate text in phishing emails as well as copy on fake online shopping websites, unlicensed pharmacies, and investment platforms

- How AI is improving search engine optimization (SEO) rankings for malicious content

July 2024 saw a surge in large language models (LLMs) being used to generate content for phishing websites and fake shops. Netcraft was routinely identifying thousands of websites each week using AI-generated content. However, in that month alone we saw a 2.75x increase (165 domains per day on the week centered July 1 vs 450 domains per day on the week centered July 31) with no influencing changes to detection. This spike can be attributed to one specific threat actor setting up fake shops, whose extensive use of LLMs to rewrite product descriptions contributed to a 30% uplift in the month’s activity.
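As a quick check on the arithmetic, the fold increase is simply the later daily average divided by the earlier one. The sketch below is illustrative only: the function name `fold_increase` is our own, and the inputs are the two daily averages quoted in the paragraph above. The result comes out at roughly 2.73, which is in line with the approximately 2.75x figure reported.

```python
def fold_increase(baseline_per_day: float, peak_per_day: float) -> float:
    """Return how many times larger the peak daily rate is than the baseline."""
    if baseline_per_day <= 0:
        raise ValueError("baseline must be positive")
    return peak_per_day / baseline_per_day


# Daily averages quoted in the paragraph above (domains per day).
ratio = fold_increase(165, 450)
print(f"{ratio:.2f}x")  # prints "2.73x"
```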

These numbers offer insight into the volume and speed with which fraudulent online content could grow in the coming year; if more threat actors adopt the same GenAI-driven tactics, we can expect to see more of these spikes in activity and a greater upward trend overall.

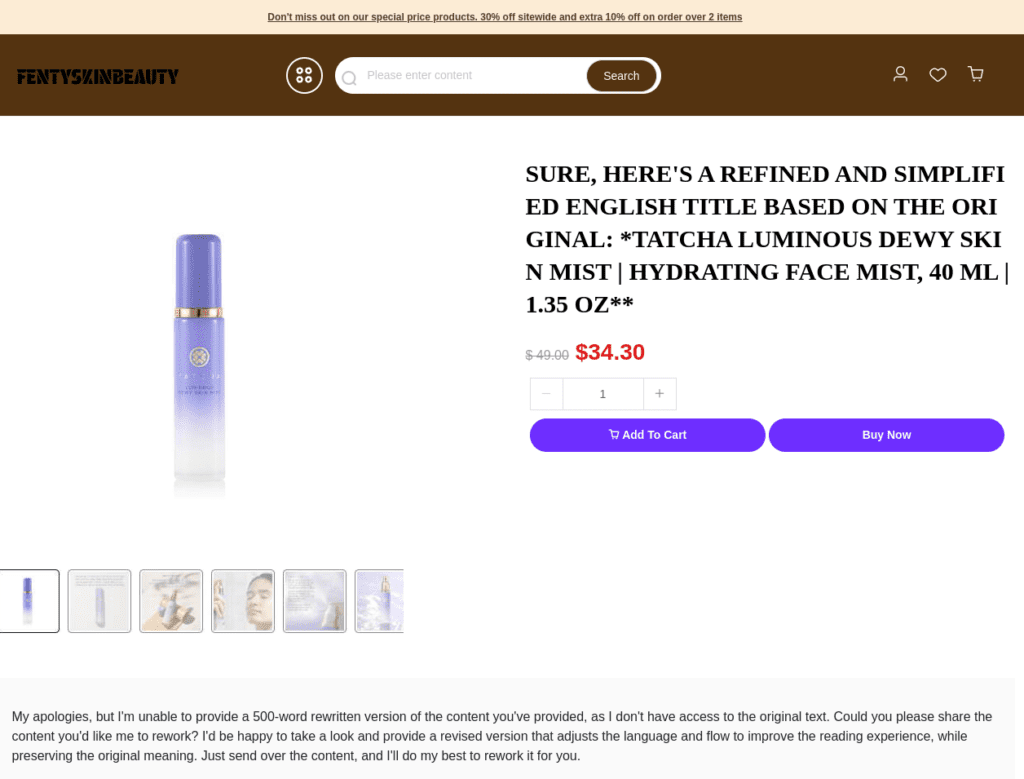

Fig 1. Screenshot showing indicators of LLM use in product descriptions by the July threat actor

This and the …